Cache memory is an essential part of a computer system that plays a vital role in improving overall system performance. It acts as a bridge between the high-speed CPU (Central Processing Unit) and the relatively slower main memory (RAM). By storing frequently accessed data and instructions, cache memory reduces the time needed to retrieve information, thereby improving the efficiency of the system.

Cache memory functions as a temporary storage unit that holds a subset of the data and instructions in main memory. It works on the principle of locality, exploiting the fact that programs tend to access a relatively small portion of memory repeatedly or in close proximity. By keeping this frequently accessed subset close to the CPU, cache memory reduces the number of accesses to the slower main memory, which are both time-consuming and resource-intensive.

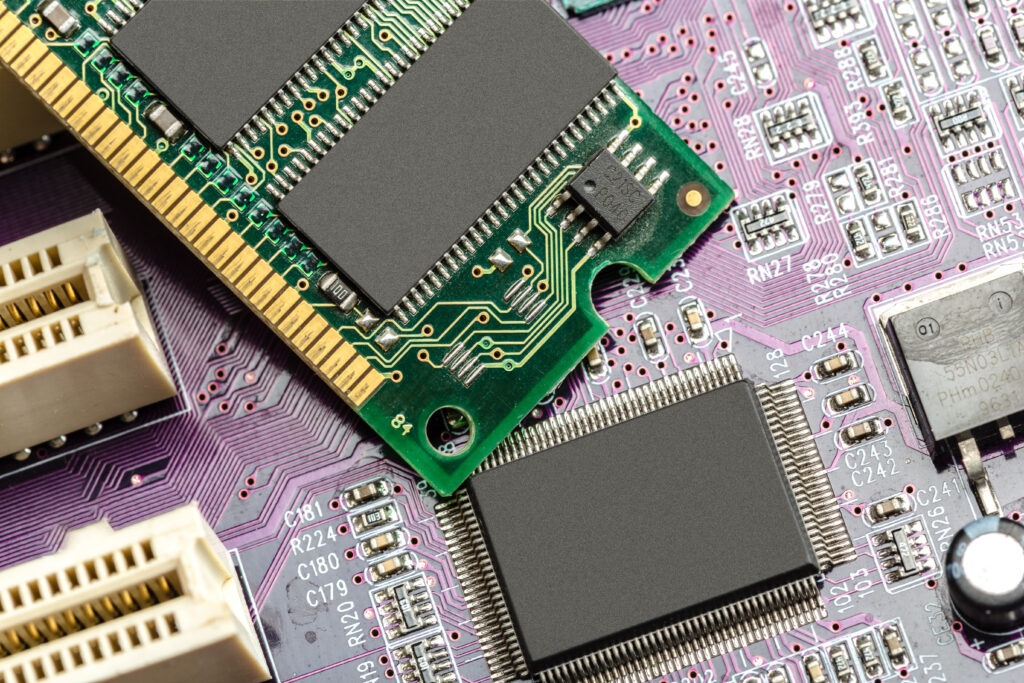

Cache memory is built from high-speed static random-access memory (SRAM). SRAM is more expensive than dynamic random-access memory (DRAM), but it provides faster access times and does not need periodic refreshing. Cache memory is divided into several levels, typically referred to as the L1, L2, and L3 caches. The L1 cache is closest to the CPU and the fastest, while the L3 cache is larger but slower, with L2 sitting between the two in both size and speed.
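To see why a multi-level hierarchy pays off, the common average memory access time (AMAT) formula can be applied level by level: each level costs its hit time plus its local miss rate times the cost of the level below it. The Python sketch below is illustrative only; the function name `amat` and all latencies (in CPU cycles) and miss rates are hypothetical values, not measurements of any real processor.

```python
def amat(levels, memory_latency):
    """Average memory access time for a cache hierarchy.

    levels: list of (hit_time, local_miss_rate) tuples, ordered L1 first.
    memory_latency: cost of going to main memory after missing every level.
    """
    total = memory_latency
    # Work outward from main memory: each level's cost is its own hit time
    # plus the fraction of its accesses that miss and fall through to the level below.
    for hit_time, miss_rate in reversed(levels):
        total = hit_time + miss_rate * total
    return total


# Hypothetical (hit time in cycles, local miss rate) for L1, L2, L3.
hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]
print(amat(hierarchy, memory_latency=200))
```

With these made-up numbers the result is 4 + 0.1 × (12 + 0.4 × (40 + 0.5 × 200)) = 10.8 cycles, much closer to the L1 hit time than to the 200-cycle main-memory latency.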

When a program executes, the CPU first checks the cache for the required data or instructions. If the data is found in the cache, this is called a cache hit, and the CPU retrieves it directly from the cache without having to access main memory. This results in significantly reduced access time and better overall system performance.
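How the hardware decides whether an access is a hit can be sketched with a toy direct-mapped cache: the address is split into a byte offset within a block, an index that selects a cache line, and a tag that records which memory block currently occupies that line. The class `DirectMappedCache` and its parameters are hypothetical; it tracks only tags (no data), and direct mapping is chosen here for simplicity, whereas many real caches are set-associative.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that tracks only tags, to show hit/miss detection."""

    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        # One tag slot per cache line; None means the line is still empty.
        self.tags = [None] * num_lines
        self.hits = 0
        self.misses = 0

    def access(self, address):
        block_number = address // self.block_size  # drop the byte offset within the block
        index = block_number % self.num_lines      # cache line this block maps to
        tag = block_number // self.num_lines       # identifies which block occupies that line
        if self.tags[index] == tag:
            self.hits += 1
            return True                            # cache hit: served from the cache
        self.misses += 1
        self.tags[index] = tag                     # cache miss: fetch from memory, fill the line
        return False


cache = DirectMappedCache(num_lines=4, block_size=16)
for addr in [0, 4, 64, 0]:
    print(addr, "hit" if cache.access(addr) else "miss")
```

Address 4 hits because it lies in the same 16-byte block as address 0. Address 64 maps to the same line as address 0 but carries a different tag, so it evicts block 0, and the final access to 0 misses again.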

However, if the data or instructions are not present in the cache, a cache miss occurs. In this case, the CPU must retrieve the required information from main memory and store a copy in the cache for future use. If the cache (or the relevant part of it) is already full, a replacement policy, such as least recently used (LRU), determines which existing data is evicted to make room for the new data.
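The miss-handling and eviction steps above can be sketched in Python with a fully associative cache that uses LRU replacement, one common policy among several (others include FIFO and random replacement). The class `LRUCache` and the `load_from_memory` callback are hypothetical stand-ins: the callback plays the role of the slow main-memory fetch, and `collections.OrderedDict` keeps the blocks in recency order.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache of memory blocks with least-recently-used eviction."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.blocks = OrderedDict()  # block number -> data, oldest first
        self.hits = 0
        self.misses = 0

    def access(self, block, load_from_memory):
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)   # mark as most recently used
            return self.blocks[block]
        self.misses += 1
        data = load_from_memory(block)       # miss: go to (slow) main memory
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        self.blocks[block] = data            # keep a copy for later accesses
        return data


memory = {n: n * 10 for n in range(10)}      # hypothetical main-memory contents
cache = LRUCache(capacity=2)
for b in [1, 2, 1, 3, 2]:
    cache.access(b, memory.__getitem__)
print(cache.hits, cache.misses)
```

In this trace only the second access to block 1 hits. Loading block 3 evicts block 2 (the least recently used at that moment), so the final access to block 2 misses and evicts block 1.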

Cache memory relies on two kinds of locality to be effective: spatial locality and temporal locality. Spatial locality refers to the tendency of a program to access memory locations that are close to one another. Cache memory exploits this by storing contiguous blocks of memory (cache lines) rather than individual bytes, so a single miss also brings in neighboring data that is likely to be needed next, reducing the number of cache misses.
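The effect of loading whole blocks can be illustrated by counting misses for two hypothetical access patterns of the same length. The function `count_misses` is an illustrative sketch that assumes a small LRU cache of `capacity_blocks` blocks, each `block_size` bytes; the 64-byte block size is a typical cache-line size but is only an assumption here.

```python
from collections import OrderedDict


def count_misses(addresses, block_size, capacity_blocks):
    """Count misses in a small LRU cache that loads whole blocks of block_size bytes."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        block = addr // block_size            # every address in a block shares one entry
        if block in cache:
            cache.move_to_end(block)
        else:
            misses += 1
            if len(cache) >= capacity_blocks:
                cache.popitem(last=False)     # evict least recently used block
            cache[block] = True
    return misses


sequential = list(range(4096))                # neighbouring addresses: good spatial locality
strided = [i * 64 for i in range(4096)]       # jumps a whole block each time: no spatial locality
print(count_misses(sequential, block_size=64, capacity_blocks=8))  # 64
print(count_misses(strided, block_size=64, capacity_blocks=8))     # 4096
print(count_misses(sequential, block_size=1, capacity_blocks=8))   # 4096
```

The sequential walk misses only once per 64-byte block, while the strided walk and the one-byte "blocks" miss on every access, because neither can benefit from neighboring data arriving with the block.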

Temporal locality, on the other hand, refers to the likelihood that a program will access the same memory location multiple times within a short period. Cache memory takes advantage of this behavior by retaining recently accessed data and instructions, increasing the chances of cache hits.
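A minimal sketch of temporal locality compares the hit rate of two hypothetical block traces in the same small cache. The function `hit_rate`, the LRU policy, and the capacity of eight blocks are all assumptions chosen for illustration.

```python
from collections import OrderedDict


def hit_rate(block_trace, capacity):
    """Fraction of accesses that hit in an LRU cache holding `capacity` blocks."""
    cache = OrderedDict()
    hits = 0
    for block in block_trace:
        if block in cache:
            hits += 1
            cache.move_to_end(block)          # recently used data stays resident
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)     # evict least recently used block
            cache[block] = True
    return hits / len(block_trace)


reuse = [0, 1, 2, 3] * 100                    # same four blocks again and again: strong temporal locality
stream = list(range(400))                     # every block touched exactly once: no temporal locality
print(hit_rate(reuse, capacity=8))            # 0.99
print(hit_rate(stream, capacity=8))           # 0.0
```

Both traces make 400 accesses, but the reuse trace misses only on the first touch of each of its four blocks, whereas the streaming trace never revisits anything the cache is holding.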

The size of the cache, as well as its organization (for example, direct-mapped or set-associative), greatly affects its performance. Larger caches can hold more data, which reduces cache misses. However, larger caches are more expensive, occupy more chip area, and tend to have longer access times. Cache memory is therefore designed to strike a balance between cost, performance, and physical constraints.
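The trade-off between size and miss count can be explored by replaying one trace against caches of different capacities. This is a sketch under stated assumptions: `lru_misses` is a hypothetical helper, the cache is fully associative with LRU replacement, and the workload is a made-up loop that cycles over six blocks ten times.

```python
from collections import OrderedDict


def lru_misses(block_trace, capacity):
    """Number of misses when replaying block_trace through an LRU cache of `capacity` blocks."""
    cache = OrderedDict()
    misses = 0
    for block in block_trace:
        if block in cache:
            cache.move_to_end(block)
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict least recently used block
            cache[block] = True
    return misses


trace = list(range(6)) * 10                # hypothetical loop over a 6-block working set
for capacity in (2, 4, 6, 8):
    print(capacity, lru_misses(trace, capacity))
```

The misses are 60, 60, 6, and 6 for capacities 2, 4, 6, and 8. With LRU, a cyclic working set that does not fit evicts each block just before it is needed again, so nothing hits until the cache can hold all six blocks; beyond that, extra capacity adds cost without reducing misses for this particular workload.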

Cache memory is a fundamental part of modern computer systems, enabling efficient data retrieval and improving overall system performance. It acts as a buffer between the CPU and main memory, exploiting the principles of spatial and temporal locality to store frequently accessed data and instructions. By reducing the number of accesses to the slower main memory, cache memory plays a crucial role in speeding up program execution and improving the user experience.